Chapter 1, Automata: The Methods and the Madness

This series of documents in this column are summarized from this book: Introduction to automata theory, languages, and computation.[1]

Why Study Automata Theory?

Introduction to Finite Automata

Automata theory is the study of abstract computing devices or "machines".

Finite automata are a useful model for many important kinds of hardware and software.

- Software for designing and checking the behavior of digital circuits.

- The "lexical analyzer" of a typical compiler, that is, the compiler component that breaks the input text into logical units, such as identifiers, keywords, and punctuation.

- Software for scanning large bodies of text, such as collections of Web pages, to find occurrences of words, phrases,or other patterns.

- Software for verifying systems of all types that have a finite number of distinct states, such as communications protocols or protocols for secure exchange of information.

Perhaps the simplest nontrivial finite automaton is an on/off switch (Figure 1).

Figure 2 shows another finite automaton that could be part of a lexical analyzer. The job of this automaton is to recognize the keyword then. It thus needs five states, each of which represents a different position in the word then that has been reached so far. These positions correspond to the prefixes of the word, ranging from the empty string (i.e., nothing of the word has been seen so far) to the complete word. We could consider the state "then" as accepting state.

Structural Representations

There are two important notations that are not automaton-like, but play an important role in the study of automata and their applications.

- Grammars are useful models when designing software that processes data with a recursive structure. The best-known example is a "parser", the component of a compiler that deals with the recursively nested features of the typical programming language, such as expressions-arithmetic, conditional, and so on. For instance,a grammatical rule like states that an expression can be formed by taking any two expressions and connecting them by a plus sign; this rule is typical of how expressions of real programming languages are formed. Later, we'll introduce context-free grammars, as they are usually called.

- Regular Expressions also denote the structure of data, especially text strings. The patterns of strings they describe are exactly the same as what can be described by finite automata. The style of these expressions differs significantly from that of grammars, and we shall content ourselves with a simple example here. The UNIX-style regular expression

[A-Z][a-z]*[ ][A-Z][A-Z], represents capitalized words followed by a space and two capital letters. This expression represents patterns in text that could be a city and state, e.g., Ithaca NY. It misses multiword city names, such as Palo Alto CA, which could be captured by the more complex expression[A-Z][a-z]*([ ][A-Z] [a-z]*)*[ ][A-Z][A-Z].

Automata and Complexity

Automata are essential for the study of the limits of computation. As we mentioned in the introduction to the chapter, there are two important issues:

- What can a computer do at all? This study is called "decidability," and the problems that can be solved by computer are called "decidable."

- What can a computer do efficiently? This study is called "intractability," and the problems that can be solved by a computer using no more time than some slowly growing function of the size of the input are called "tractable." Often,we take all polynomial functions to be "slowly growing," while functions that grow faster than any polynomial are deemed to grow too fast.

Introduction to Formal Proof

Perhaps more than other core subjects of computer science, automata theory lends itself to natural and interesting proofs, both of the deductive kind (a sequence of justified steps) and the inductive kind (recursive proofs of a parameterized statement that use the statement itself with "lower" values of the parameter).

Deductive Proofs

A deductive proof consists of a sequence of statements whose truth leads us from some initial statement, called the hypothesis or the given statement(s), to a conclusion statement. Each step in the proof must follow, by some accepted logical principle, from either the given facts, or some of the previous statements in the deductive proof, or a combination of these.

The hypothesis may be true or false, typically depending on values of its parameters. Often, the hypothesis consists of several independent statements connected bya logical AND. In those cases, we talk of each of these statements as a hypothesis, or as a given statement.

The theorem that is proved when we go from a hypothesis to a conclusion is the statement "if then ." We say that is deduced from .

Reduction to Definitions

In many other theorems, including many from automata theory, the terms used in the statement may have implications that are less obvious.

If you are not sure how to start a proof, convert all terms in the hypothesis to their definitions.

Here is an example of a theorem that is simple to prove once we have expressed its statement in elementary terms. It uses the following two definitions:

- A set is finite if there exists an integer such that has exactly elements in a set . We write , where is used to denote the number of elements in a set . If the set is not finite, we say is infinite. Intuitively, an infinite set is a set that contains more than any integer number of elements.

- If and are both subsets of some set , then is the complement of (with respect to ) if and . That is, each element of is in exactly those elements off that are not in .

Other Theorem Forms

Ways of Saying "If-Then"

Here are some of the other ways in which "if then " might appear.

- implies .

- only if .

- if .

- Whenever holds, follows.

- If holds, then follows.

- Whenever holds, holds.

In formal logic one often sees the operator in place of "if-then". That is, the statement "if then " could appear as in some mathematical literature.

If-And-Only-If Statements

In formal logic, one may see the operator or to denote an "if-and-only-if" statement. That is, and mean the same as " if and only if ".

When proving an if-and-only-if statement, it is important to remember that you must prove both the "if" and "only-if" parts. Sometimes, you will find it helpful to break an if-and-only-if into a succession of several equivalences. That is, to prove " if and only if ", you might first prove " if and only if " and then prove " if and only if ".

Theorems That Appear Not to Be If-Then Statements

Sometimes,we encounter a theorem that appears not to have a hypothesis. An example is the well-known fact from trigonometry:

Pythagorean Identity

TheoremAdditional Forms of Proof

In this section,we take up several additional topics concerning how to construct proofs:

- Proofs about sets.

- Proofs by contradiction.

- Proofs by counterexample.

Proving Equivalences About Sets

The Distributive Law of Union Over Intersection

TheoremAn element is in if and only if is in .

The elements of are all and only the elements of .

The Contrapositive

Every if-then statement has an equivalent form that in some circumstances is easier to prove. The contrapositive of the statement "if then " is "if not then not ".

A statement and its contrapositive are either both true or both false,so we can prove either to prove the other.

Proof by Contradiction

Another way to prove a statement of the form "if then " is to prove the statement: and not implies falsehood.

Counterexamples

In real life,we are not told to prove a theorem. Rather,we are faced with something that seems true - a strategy for implementing a program for example - and we need to decide whether or not the "theorem" is true. To resolve the question, we may alternately try to prove the theorem, and if we cannot, try to prove that its statement is false.

Theorems generallyare statements about an infinite number of cases, perhaps all values of its parameters. Indeed, strict mathematical convention will only dignify a statement with the title "theorem" if it has an infinite number of cases; statements that have no parameters, or that apply to only a finite number of values of its parameter(s)are called observations. It is sufficient to show that an alleged theorem is false in any one case in order to show it is not a theorem. The situation is analogous to programs, since a program is generally considered to have a bug if it fails to operate correctly for even one input on which it was expected to work.

It often is easier to prove that a statement is not a theorem than to prove it is a theorem.

Inductive Proofs

There is a special form of proof, called "inductive", that is essential when dealing with recursively defined objects.

Inductions on Integers

Suppose we are given a statement , about an integer , to prove. One common approach is to prove 2 things:

- The basis, where we show for a particular integer . Usually, or , but there are examples where we want to start at some higher , perhaps because the statement is false for a few small integers.

- The inductive step, where we assume , where is the basis integer, and we show that "if then ".

The Induction Principle: If we prove and we prove that for all , implies , then we may conclude for all .

More General Forms of Integer Inductions

Sometimes an inductive proof is made possible only by usinga more general scheme than the one proposed here, where we proved a statement for one basis value and then proved that "if then ". Two important generalizations of this scheme are:

-

We can use several basis cases. That is, we prove for some .

-

In proving , we can use the truth of all the statements

rather than just using . Moreover, if we have proved basis cases up to , then we can assume , rather than just .

Structural Inductions

Like inductions, all recursive definitions have a basis case, where one or more elementary structures are defined, and an inductive step, where more complex structures are defined in terms of previously defined structures.

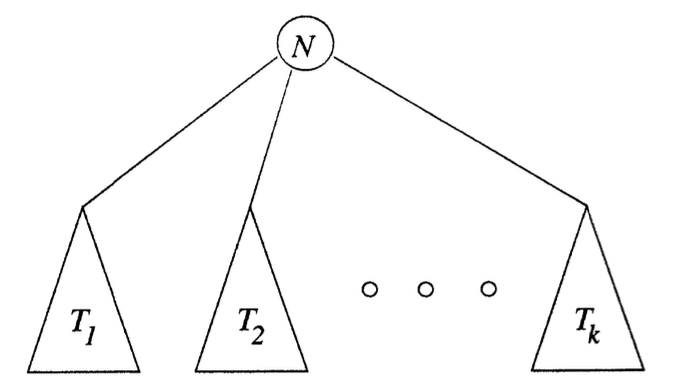

Inductive construction of a tree

DefinitionBasis: A single node is a tree, and that node is the root of the tree.

Induction: If are trees, then we can form a new tree as follows:

- Begin with a new node , which is the root of the tree.

- Add copies of all the trees .

- Add edges from node to the roots of each of the trees .

Figure 3 shows the inductive construction of a tree with the root from smaller trees.

Mutual Inductions

Sometimes, we cannot prove a single statement by induction, but rather need to prove a group of statements together by induction on .

Strictly speaking, proving a group of statements is no different from proving the conjunction (logical AND) of all the statements.

However, when there are really several independent statements to prove, it is generally less confusing to keep the statements separate and to prove them all in their own parts of the basis and inductive steps. We call this sort of proof mutual induction.

We can abstract the pattern for all mutual inductions:

- Each of the statements must be proved separately in the basis and in the inductive step.

- If the statements are "if-and-only-if," then both directions of each statement must be proved, both in the basis and in the induction.

The Central Concepts of Automata Theory

Alphabets

Alphabets

DefinitionAn alphabet is a finite, nonempty set of symbols. Conventionally, we use the symbol for an alphabet. Common alphabets include:

- , the binary alphabet.

- , the set of lower-case letters.

- The set of all ASCII characters, or the set of all printable ASCII characters.

Strings

Strings

DefinitionA string (or sometimes word) is a finite sequence od symbols chosen from some alphabet.

The Empty String

The empty string is the string with zero occurrences of symbols. This string, denoted , is a string that may be chosen from any alphabet whatsoever.

Length of a String

It is often useful to classify strings by their length, that is, the number of positions for symbols in the string. For instance, 01101 has length 5.

The standard notation for the length of a string is . For example, .

Power of an Alphabet

If is an alphabet, we can express the set of all strings of a certain length from that alphabet by using an exponential notation. We define to be the set of strings of length , each of whose symbols is in .

Note that , regardless of what alphabet is.

The set of all strings over an alphabet is , i.e. . That is, .

The set of nonempty strings from alphabet is denoted . Thus, two appropriate equivalences are:

- ;

- .

is an alphabet, is a set of strings (length = 1).

Concatenation of Strings

Let and be strings, then denotes the concatenation of and . That is, the string formed by making a copy of and following it by a copy of . More precisely, if is the string composed of symbols and is the string composed of symbols , then is the string of length .

Languages

A set of strings all of which are chosen from some , where is a particular alphabet, is called a language. If is an alphabet, and , then is a language over .

Notice that a language over need not include strings with all the symbols of , so once we have established that is a language over , we also know it is a language over any alphabet that is a superset of .

, the empty language, is a language over any alphabet.

, the language consisting of only the empty string, is also a language over any alphabet.

, the former has no strings and the latter has one string.

The only important constraint on what can be a language is that all alphabets are finite. Thus languages, although they can have. an infinite number of strings, are restricted to consist of strings drawn from one fixed, finite alphabet.

It is common to describe a language using a "set-former":

For example:

It is also common to replace by some expression with parameters and describe the strings in the language by stating conditions on the parameters. For example:

which is .

Problems

In automata theory, a problem is the question of deciding whether a given string is a member of some particular language. It turns out, as we shall see, that anything we more colloquially call a "problem" can be expressed as membership in a language. More precisely, if is an alphabet, and is a language over , then the problem is:

- Given a string in , decide whether or not is in .